What is the Burp Suite?

Burp Suite is an integrated platform for attacking web applications. It contains all of the Burp tools with numerous interfaces between them designed to facilitate and speed up the process of attacking an application. All tools share the same robust framework for handling HTTP requests, persistence, authentication, upstream proxies, logging, alerting and extensibility.

Burp Suite allows you to combine manual and automated techniques to enumerate, analyse, scan, attack and exploit web applications. The various Burp tools work together effectively to share information and allow findings identified within one tool to form the basis of an attack using another.

Source: http://www.portswigger.net/suite/

The Burp Suite is made up of the following tools (descriptions taken from the PortSwigger website):

Proxy: Burp Proxy is an interactive HTTP/S proxy server for attacking and testing web applications. It operates as a man-in-the-middle between the end browser and the target web server, and allows the user to intercept, inspect and modify the raw traffic passing in both directions.

Spider: Burp Spider is a tool for mapping web applications. It uses various intelligent techniques to generate a comprehensive inventory of an application’s content and functionality.

Scanner: Burp Scanner is a tool for performing automated discovery of security vulnerabilities in web applications. It is designed to be used by penetration testers, and to fit in closely with your existing techniques and methodologies for performing manual and semi-automated penetration tests of web applications.

Intruder: Burp Intruder is a tool for automating customised attacks against web applications.

Repeater: Burp Repeater is a tool for manually modifying and reissuing individual HTTP requests, and analysing their responses. It is best used in conjunction with the other Burp Suite tools. For example, you can send a request to Repeater from the target site map, from the Burp Proxy browsing history, or from the results of a Burp Intruder attack, and manually adjust the request to fine-tune an attack or probe for vulnerabilities.

Sequencer: Burp Sequencer is a tool for analysing the degree of randomness in an application’s session tokens or other items on whose unpredictability the application depends for its security.

Decoder: Burp Decoder is a simple tool for transforming encoded data into its canonical form, or for transforming raw data into various encoded and hashed forms. It is capable of intelligently recognising several encoding formats using heuristic techniques.
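To make the Decoder description a little more concrete, here is a rough Python sketch of the kinds of transformations it refers to: URL-encoding, Base64-encoding and hashing a raw string, then decoding back to the original form. This is not how Burp implements it; the sample string and variable names are made up for illustration.

```python
import base64
import hashlib
import urllib.parse

# Hypothetical raw form data, used only for illustration.
raw = "user=admin&pass=p@ss word"

# Raw data -> encoded forms.
url_encoded = urllib.parse.quote(raw, safe="")
b64_encoded = base64.b64encode(raw.encode("utf-8")).decode("ascii")

# Raw data -> hashed form (one-way, cannot be decoded back).
sha256_hex = hashlib.sha256(raw.encode("utf-8")).hexdigest()

# Encoded forms -> canonical (raw) form.
url_decoded = urllib.parse.unquote(url_encoded)
b64_decoded = base64.b64decode(b64_encoded).decode("utf-8")

print(url_encoded)
print(b64_encoded)
print(sha256_hex)
```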

Enabling the Burp Suite Proxy

To begin using the Burp Suite to test our example web application, we need to configure our web browser to use the Burp Suite as a proxy. The Burp Suite proxy listens on port 8080 by default, but you can change this if you want to.

You can see in the image below that I have configured Firefox to use the Burp Suite proxy for all traffic.

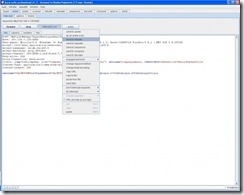
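If you want a script, rather than the browser, to send its traffic through the proxy, the same settings can be sketched in Python with the standard library. This is only a minimal sketch: it assumes the proxy is listening on 127.0.0.1 at the default port 8080, and the function names are my own.

```python
import urllib.request

# Assumption: Burp's proxy listener is on localhost, default port 8080.
BURP_PROXY = "http://127.0.0.1:8080"


def burp_proxies(proxy_url: str = BURP_PROXY) -> dict:
    """Return a proxy mapping that sends HTTP and HTTPS traffic to the proxy."""
    return {"http": proxy_url, "https": proxy_url}


def build_opener_via_burp(proxy_url: str = BURP_PROXY) -> urllib.request.OpenerDirector:
    """Build a urllib opener whose requests are routed through the proxy."""
    handler = urllib.request.ProxyHandler(burp_proxies(proxy_url))
    return urllib.request.build_opener(handler)


opener = build_opener_via_burp()
# Calling opener.open(<target URL>) would now pass through the proxy,
# so the request would show up in Burp just like browser traffic.
```

Nothing is sent until `opener.open(...)` is called, so building the opener is safe even when the proxy is not running yet.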

When you open the Burp Suite proxy tool, you can check that the proxy is running by clicking on the options tab.

You can see that the proxy is using the default port:

The proxy is now running and ready to use. You can see that the proxy options tab has quite a few items that we can configure to meet our testing needs.
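As a quick check from outside the Burp window, you can also test whether anything is accepting connections on the proxy port. The sketch below assumes the listener is on 127.0.0.1:8080; the helper name `is_listening` is made up, and it only tells you that some process is listening on that port, not that it is Burp.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


# Assumption: default Burp proxy listener address.
print(is_listening("127.0.0.1", 8080))
```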

Now for the main phase: we log in to an account on Facebook, Orkut, MySpace or any other website and try to capture the username and password using Burp. Let's see how I do it. Let the hacking begin. (This is for study purposes only; do not misuse it.)

To do this we must ensure that the Burp Suite proxy is configured to intercept our requests:

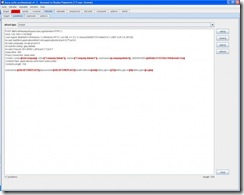

With intercept enabled, we will submit the logon form and send the request to the Intruder, as you can see below:

The Burp Suite will send our request to the Intruder tool so we can begin our testing. You can see the request in the Intruder tool below:

The tool has automatically created payload positions for us. The payload positions are defined using the § character: the Intruder will replace the value between two § characters with one of our test inputs.
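The idea of a payload position can be sketched in a few lines of Python. This is just an illustration of the substitution, not Burp's own code: the request body, the field names and the `substitute` helper are all made up. One chosen position receives the test input, and every other position keeps its original value with the § markers stripped.

```python
import re

# A payload position is any run of text enclosed by two § characters.
POSITION = re.compile("§([^§]*)§")


def substitute(template: str, index: int, payload: str) -> str:
    """Put payload into the position numbered index (0-based).

    All other positions keep their original value, minus the § markers.
    """
    count = -1

    def repl(match: re.Match) -> str:
        nonlocal count
        count += 1
        return payload if count == index else match.group(1)

    return POSITION.sub(repl, template)


# Hypothetical logon request body with two marked positions.
template = "username=§admin§&password=§secret§"

for candidate in ["test1", "test2"]:
    print(substitute(template, 1, candidate))
```

Each pass through the loop produces one request body with the second position replaced, which is the basic operation the different attack types build on.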

The positions tab, which is shown in the image above, has four different attack types for you to choose from (definitions taken from http://www.portswigger.net/intruder/help.html):